Science fiction did a great job preparing us for submarines and rockets. But it seems to be struggling lately. We don’t know what to hope for, what to fear, or what genre we’re even in.

Space opera? Seems unlikely. And now that we’ve made it to 2021, the threat of zombie apocalypse is receding a bit. So it’s probably some kind of cyberpunk. But there are many kinds of cyberpunk. Should we get ready to fight AI or to rescue replicants from a sinister corporation? It hasn’t been obvious. I’m writing this, however, because recent twists in the plot seem to clear up certain mysteries, and I think it’s now possible to guess which subgenre the 2020s are steering toward.

Clearly some plot twist involving machine learning is underway. It’s been hard to keep up with new developments: from BERT (2018) to GPT-3 (2020)—which can turn a prompt into an imaginary news story—to, most recently, CLIP and DALL-E (2021), which can translate verbal descriptions into images.

I have limited access to DALL-E, and can’t test it in any detail. But if we trust the images released by OpenAI, the model is good at fusing and extrapolating abstractions: it not only knows what it means for a lemur to hold an umbrella, but can produce a surprisingly plausible “photo of a television from the 1910s.” All of this is impressive for a research direction that isn’t much more than four years old.

On the other hand, some AI researchers don’t believe these models are taking the field in the direction it was supposed to go. Gary Marcus and Ernest Davis, for instance, doubt that GPT-3 is “an important step toward artificial general intelligence—the kind that would … reason broadly in a manner similar to humans … [GPT-3] learns correlations between words, and nothing more.”

People who want to contest that claim can certainly find evidence on the other side of the question. I’m not interested in pursuing the argument here. I just want to know why recent advances in deep learning give me a shivery sense that I’ve crossed over into an unfamiliar genre. So let’s approach the question from the other side: what if these models are significant because they don’t reason “in a manner similar to humans”?

It is true, after all, that models like DALL-E and GPT-3 are only learning (complex, general) patterns of association between symbols. When GPT-3 generates a sentence, it is not expressing an intention or an opinion—just making an inference about the probability of one sentence in a vast “latent space” of possible sentences implied by its training data.
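To make "patterns of association between symbols" a little more concrete, here is a deliberately tiny sketch in Python. It is not how GPT-3 works: GPT-3 is a large neural network, and this is only a bigram counter, swapped in because it fits in a few lines. The corpus and the function names (`train_bigrams`, `sentence_probability`) are invented for illustration. What it does show is the basic move: the model assigns a probability to a sentence it has never seen, purely from associations between words it has seen.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus (an assumption for illustration);
# a real language model is trained on billions of words.
CORPUS = [
    "the boat drifted down the river",
    "the dog slept in the boat",
    "the river rose in the night",
]

def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def sentence_probability(counts, sentence):
    """Multiply P(word | previous word) across the whole sentence."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        followers = counts.get(prev, Counter())
        total = sum(followers.values())
        if total == 0 or followers[nxt] == 0:
            return 0.0  # this word pair was never observed
        prob *= followers[nxt] / total
    return prob

counts = train_bigrams(CORPUS)

# A sentence that appears nowhere in the corpus still gets a nonzero probability.
novel = sentence_probability(counts, "the dog slept in the night")

# A sentence that violates the observed associations gets zero.
scrambled = sentence_probability(counts, "boat the river")
```

Nothing in this sketch wants anything or plans anything. It only records which words tend to follow which, and even that is enough to pick out unwritten sentences that are consistent with the training data. A model on the scale of GPT-3 does something far richer, but in the same spirit.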

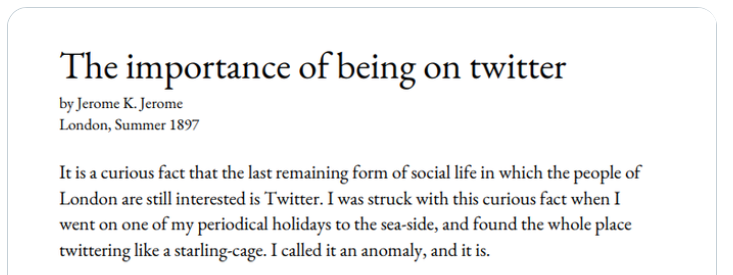

When I say “a vast latent space,” I mean really vast. This space includes, for instance, the thoughts Jerome K. Jerome might have expressed about Twitter if he had lived in our century.

But a latent space, however vast, is still quite different from goal-driven problem solving. In a sense the chimpanzee below is doing something more like human reasoning than a language model can.

Like us, the chimpanzee has desires and goals, and can make plans to achieve them. A language model does none of that by itself—which is probably why language models are impressive at the paragraph scale but tend to wander if you let them run for pages.

So where does that leave us? We could shrug off the buzz about deep learning, say “it’s not even as smart as a chimpanzee yet,” and relax because we’re presumably still living in a realist novel.

And yes, to be sure, deep learning is in its infancy and will be improved by modeling larger-scale patterns. On the other hand, it would be foolish to ignore early clues about what it’s good for. There is something bizarrely parochial about a view of mental life that makes predicting a nineteenth-century writer’s thoughts about Twitter less interesting than stacking boxes to reach bananas. Perhaps it’s a mistake to assume that advances in machine learning are only interesting when they resemble our own (supposedly “general”) intelligence. What if intelligence itself is overrated?

The collective symbolic system we call “culture,” for instance, coordinates human endeavors without being itself intelligent. What if models of the world (including models of language and culture) are important in their own right—and needn’t be understood as attempts to reproduce the problem-solving behavior of individual primates? After all, people are already very good at having desires and making plans. We don’t especially need a system that will do those things for us. But we’re not great at imagining the latent space of (say) all protein structures that can be created by folding amino acids. We could use a collaborator there.

Storytelling seems to be another place where human beings sense a vast space of latent possibility, and tend to welcome collaborators with maps. Look at what’s happening to interactive fiction on sites like AI Dungeon. Tens of thousands of users are already making up stories interactively with GPT-3. There’s a subreddit devoted to the phenomenon. Competitors are starting to enter the field. One startup, Hidden Door, is trying to use machine learning to create a safe social storytelling space for children. For a summary of what collaborative play can build, we could do worse than their motto: “Worlds with Friends.”

It’s not hard to see how the “social play” model proposed by Hidden Door could eventually support the form of storytelling that grown-ups call fan fiction. Characters or settings developed by one author might be borrowed by others. Add something like DALL-E, and writers could produce illustrations for their story in a variety of styles—from Arthur Rackham to graphic novel.

Will a language model ever be as good as a human author? Can it ever be genuinely original? I don’t know, and I suspect those are the wrong questions. Storytelling has never been a solitary activity undertaken by geniuses who invent everything from scratch. From its origin in folk tales, fiction has been a game that works by rearranging familiar moves, and riffing on established expectations. Machine learning is only going to make the process more interactive, by increasing the number of people (and other agents) involved in creating and exploring fictional worlds. The point will not be to replace human authors, but to make the universe of stories bigger and more interconnected.

Storytelling and protein folding are two early examples of domains where models will matter not because they’re “intelligent,” but because they allow us—their creators—to collaboratively explore a latent space of possibility. But I will be surprised if these are the only two places where that pattern emerges. Music and art, and other kinds of science, are probably open to the same kind of exploration.

This collaborative future could be weirder than either science fiction or journalism has taught us to expect. News stories about ML invariably invite readers to imagine autonomous agents analogous to robots: either helpful servants or inscrutable antagonists like the Terminator and HAL. Boring paternal condescension or boring dread are the only reactions that seem possible within this script.

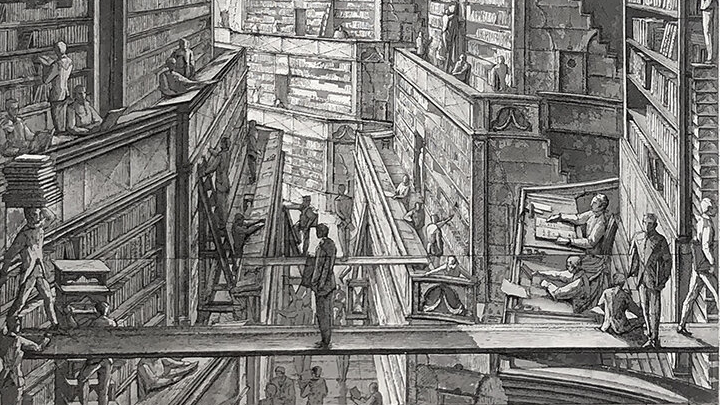

We need to be considering a wider range of emotions. Maybe a few decades from now, autonomous AI will be a reality and we’ll have to worry whether it’s servile or inscrutable. Maybe? But that’s not the genre we’re in at the moment. Machine learning is already transforming our world, but the things that should excite and terrify us about the next decade are not even loosely analogous to robots. We should be thinking instead about J. L. Borges’ Library of Babel—a vast labyrinth containing an infinite number of books no eye has ever read. There are whole alternate worlds on those shelves, but the Library is not a robot, an alien, or a god. It is just an extrapolation of human culture.

Machine learning is going to be, let’s say, a thread leading us through this Library—or perhaps a door that can take us to any bookshelf we imagine. So if the 2020s are a subgenre of SF, I would personally predict a mashup of cyberpunk and portal fantasy. With sinister corporations, of course. But also more wardrobes, hidden doors, encyclopedias of Tlön, etc., than we’ve been led to expect in futuristic fiction.

I’m not saying this will be a good thing! Human culture itself is not always a good thing, and extrapolating it can take you places you don’t want to go. For instance, movements like QAnon make clear that human beings are only too eager to invent parallel worlds. Armored with endlessly creative deepfakes, those worlds might become almost impenetrable. So we’re probably right to fear the next decade. But let’s point our fears in a useful direction, because we have more interesting things to worry about than a servant who refuses to “open the pod bay doors.” We are about to be in a Borges story, or maybe, optimistically, the sort of portal fantasy where heroines create doors with a piece of chalk and a few well-chosen words. I have no idea how our version of that story ends, but I would put a lot of money on “not boring.”