Key Takeaway:

Online algorithms on social media platforms amplify information that people are biased to learn from, leading to social misperceptions, conflict and the spread of misinformation. This mismatch between human psychology and algorithmic amplification, which the researchers call functional misalignment, can distort people's perceptions of the social world. Research on this topic is in its infancy, but new studies are emerging to examine key components of algorithm-mediated social learning. To foster accurate human social learning, researchers are working on new algorithm designs that increase engagement while penalizing PRIME (prestigious, in-group, moral and emotional) information.

People’s daily interactions with online algorithms affect how they learn from others, with negative consequences including social misperceptions, conflict and the spread of misinformation, my colleagues and I have found.

People are increasingly interacting with others in social media environments where algorithms control the flow of social information they see. Algorithms determine in part which messages, which people and which ideas social media users see.

On social media platforms, algorithms are mainly designed to amplify information that sustains engagement, meaning they keep people clicking on content and coming back to the platforms. I’m a social psychologist, and my colleagues and I have found evidence suggesting that a side effect of this design is that algorithms amplify information people are strongly biased to learn from. We call this information “PRIME,” for prestigious, in-group, moral and emotional information.

In our evolutionary past, biases to learn from PRIME information were very advantageous: Learning from prestigious individuals is efficient because these people are successful and their behavior can be copied. Paying attention to people who violate moral norms is important because sanctioning them helps the community maintain cooperation.

But what happens when PRIME information becomes amplified by algorithms and some people exploit algorithm amplification to promote themselves? Prestige becomes a poor signal of success because people can fake prestige on social media. Newsfeeds become oversaturated with negative and moral information, so the result is conflict rather than cooperation.

The interaction of human psychology and algorithm amplification leads to dysfunction because social learning supports cooperation and problem-solving, but social media algorithms are designed to increase engagement. We call this mismatch functional misalignment.

Why it matters

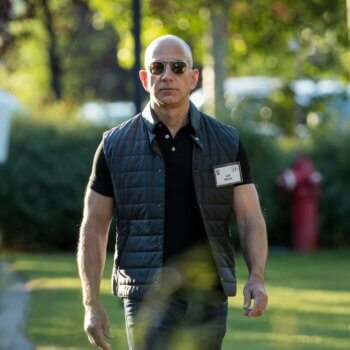

One of the key outcomes of functional misalignment in algorithm-mediated social learning is that people start to form incorrect perceptions of their social world. For example, recent research suggests that when algorithms selectively amplify more extreme political views, people begin to think that their political in-group and out-group are more sharply divided than they really are. Such “false polarization” might be an important source of greater political conflict.Social media algorithms amplify extreme political views.

Functional misalignment can also lead to greater spread of misinformation. A recent study suggests that people who spread political misinformation leverage moral and emotional information – for example, posts that provoke moral outrage – to get people to share it more. When algorithms amplify moral and emotional information, misinformation gets amplified along with it.

What other research is being done

In general, research on this topic is in its infancy, but there are new studies emerging that examine key components of algorithm-mediated social learning. Some studies have demonstrated that social media algorithms clearly amplify PRIME information.

Whether this amplification leads to offline polarization is hotly contested at the moment. A recent experiment found evidence that Meta's newsfeed increases polarization, but another experiment, conducted in collaboration with Meta, found no evidence that exposure to its algorithmic Facebook newsfeed increased polarization.

More research is needed to fully understand the outcomes that emerge when humans and algorithms interact in feedback loops of social learning. Social media companies have most of the needed data, and I believe that they should give academic researchers access to it while also balancing ethical concerns such as privacy.

What’s next

A key question is what can be done to make algorithms foster accurate human social learning rather than exploit social learning biases. My research team is working on new algorithm designs that increase engagement while also penalizing PRIME information. We argue that this might maintain the user activity that social media platforms seek while also making people's social perceptions more accurate.