I’ve been thinking about collectives in technology for a while. Collectives in the sense of organisation, and collective in the sense of shared, social value, distinct from individual benefits. In tech, that simultaneously means products and services which serve shared needs, not just individual ones; Doteveryone’s research into digital attitudes last year showed that people were increasingly feeling that the internet had been generally good for them personally, but much less good for society. It also means shared ownership and control of technology. Ownership and control matter. This means getting away from services that are withdrawn because of a strategy change at a company in another country, or where a handful of engineers in Silicon Valley get astonishingly rich whilst the millions who use a service and generate that wealth simply get the service, or where an investor drives a business model change that is good for getting a company to an exit, but not good for users.

We can see the shared value in tech, as distinct from the personal user value, in several ways. At a prosaic level, we need to think about dynamic groups of people when designing technologies for settings that groups share, such as the connected home (check out Alex Deschamps-Sonsino’s excellent book on this). Putting users first is not the answer to everything, as Cassie Robinson wrote last year; we need to consider relationships and outcomes, too. Software-driven cars are not just about the person using them to get from A to B, but the people they pass, the town they travel through, the needs of pedestrians and cyclists, considerations of noise and clean air. Thinking about shared value also enables us to realise values which make sense collectively but not individually. For instance, privacy: we benefit from a society where we have a right to a private life, even if some of us have nothing to hide. (There’s a recent article exploring the idea of privacy as a commons.)

Last Friday I was at the British Library for the UK launch of Douglas Rushkoff’s new book, Team Human.

The event was OK — a very receptive audience for Rushkoff’s ideas, although a very mixed bag of questions in the discussion hosted by George Monbiot. I was struck by the contrast between Rushkoff’s call for empathy and understanding even for the ‘other’, for instance, the person in the Make America Great Again hat, and Monbiot’s description of our UK political leadership as ‘psychopaths.’ The audience loved it, of course, being clearly overwhelmingly against Brexit, but we need to find ways to relate to and engage with the other side of that debate and not just resort to insults. (This has also frustrated me in some of the People’s Vote campaigning — an opportunity to bring people together around a shared goal of a meaningful vote on specific realistic options seemed to me to become more focussed on Remainer goals, creating more division.)

If the event had one weakness, it was that, despite a powerful call to action, the only practical action suggested seemed to be engaging with local politics: showing up for council meetings and so on. A good idea indeed, but a very small step on the road.

Team Human is a rallying cry for a more collective and collaborative approach to our world, getting away from inappropriate competitiveness and isolation, focussing on the key things that make us human, and calling for solidarity in the face of technology, political division, and climate change. Rushkoff says that we have lost touch with important human values through neoliberalism and a focus on the individual, and that we are, in these things, going against our true natures. The book is written in many small sections, grouped under bigger themes, which is an unusual style and helps keep a sense of momentum. There is quite a bit on technology: a frustration with the focus on regulating Facebook, when the real issues are in the structures underlying the technology; and the destructiveness of our obsession with growth, for both money and tech.

A few passages in the book struck me. On automation and AI:

The real threat is that we’ll lose our humanity to the value system we embed in our robots, and that they in turn impose on us.

Right. If we use these tools to optimise for today’s bureaucratic, efficiency-centric business-minded world, we’ll keep getting more of that.

Our values — ideals such as love, connection, justice, distributed prosperity — are not abstract. We have simply lost our capacity to relate to them. Values once gave human society meaning and direction. Now this function is fulfilled by data, and our great ideals are reduced to memes.

I think people may have had equivalents to memes, in this sense, for some time — songs, jokes, catchphrases. But certainly we seem to have lost the integration of values like these into some parts of everyday activities.

We must learn to see the technologically accelerated social, political, and economic chaos ahead of us as an invitation for more willful participation. It’s not a time to condemn the human activity that’s brought us to this point, but to infuse this activity with more human and humane priorities. Otherwise, the technology — or any system of our invention, such as money, agriculture, or religion — ends up overwhelming us, seemingly taking on a life of its own, and forcing us to prioritize its needs and growth over our own. We shouldn’t get rid of smartphones, but program them to save our time instead of stealing it. … This will require more human ingenuity, not less.

This isn’t about not having nice things — it’s about having even nicer things, and doing the extra work to make that a reality.

The discussion touched on climate change quite a bit; this was the first day of UK children going on climate strike, following Greta Thunberg’s lead and school strikes elsewhere.

Phenomena such as climate change occur on time scales too large for most people to understand, whether they’re being warned by scientists or their great-grandparents. Besides, the future is a distancing concept — someone else’s problem. Brain studies reveal that we relate to our future self the way we relate to a completely different person. We don’t identify that person as us. Perhaps this is a coping mechanism. If we truly ponder the horrific possibilities, everything becomes hyperbolic. We find it easier to imagine survival tactics for the zombie apocalypse than ideas to make the next ten years a bit better.

It seems a very long time since a group of us tried to tackle some of that problem at Serious Change, where amazingly some web presence survives. One of our guiding questions was: how do we make climate issues relatable and actionable for the Daily Mail reader? Despite working with amazing people, such as David MacKay, we made little headway.

I was intrigued by the distinction between revolution and renaissance, especially as applied to digital technologies:

Revolutionaries act as if they are destroying the old and starting something new. … So the digital revolution — however purely conceived — ultimately brought us a new crew of mostly male, white, libertarian technologists, who believed they were uniquely suited to create a set of universal rules for humans. But those rules — the rules of internet startups and venture capitalism — were really just the same old rules as before. And they supported the same sorts of inequalities, institutions, and cultural values.

A renaissance, on the other hand, is a retrieval of the old. Unlike a revolution, it makes no claim on the new. A renaissance is, as the word suggests, a rebirth of old ideas in a new context. That may sound less radical than revolutionary upheaval, but it offers a better way to advance our deepest human values.

So what values can be retrieved by our renaissance? The values that were lost or repressed during the last one: environmentalism, women’s rights, peer-to-peer economics, and localism.

The book ends with a positive sense that we can change things and make a difference:

But the future is not something we arrive at so much as something we create through our actions in the present. Even the weather, at this point, is subject to the choices we make today about energy, consumption, and waste. The future is less a noun than a verb, a thing we do. We can futurize manipulatively, keeping people distracted from their power in the present and their connection to the past. This alienates people from both their history and their core values. Or we can use the idea of the future more constructively, as an exercise in the creation and transmission of values over time. This is the role of storytelling, aesthetics, song, and poetry. Art and culture give us a way to retrieve our lost ideals, actively connect to others, travel in time, communicate beyond words, and practice the hard work of participatory reality creation.

As more people grapple with the ideas of tech and AI, and try to imagine different futures from those suggested by the latest wave of AI development (and hype), there have been several efforts to use science fiction as a way to explore new ideas. Doteveryone commissioned Women Invent the Future, to surface different perspectives from those in the predominantly male tech workforce; the Centre for the Future of Intelligence is looking at global AI narratives.

It seems to me that if we are concerned less with technology than with the underlying drivers of finance and power, control and social values, then perhaps we should be looking at alternative narratives of the future for these. Where are the stories revolving around co-operatives, solidarity, thriving public and common goods? If we generally build what we dream about in AI, surely the same is true of how we organise things.

Maybe we need more visions of a collective future, so we can be inspired to build it.

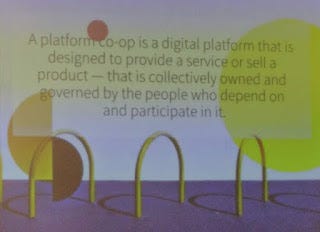

Tuesday saw the launch of a new report from Nesta and Co-operatives UK, illustrating a new model to provide capital risk financing to platform co-operatives. The report includes some useful background on platform co-ops plus examples of the different kinds, as well as the outline of a community-shares type model. (The report is now included in my somewhat messy collection of funding and support resources for co-ops, Zebras and other equitable businesses.)

The event also launched a £1m fund to provide seed support for UK platform co-ops. There are plans for a roadshow, going round tech hubs and cities around the country, to raise awareness with entrepreneurs and to understand the opportunity for this model (although a couple of us pointed out that targeting earlier stage startups, before they are locked in to an organisational type and business model, might be worthwhile too). I’m optimistic that this forms part of a growing set of funding options for co-ops, although it’s still dwarfed by the mainstream investment market (or, as John Bevan pointed out, by the collective wealth of co-ops worldwide, who could be doing more to support new co-ops, in line with Rochdale principle 6).

Both Nesta and Co-operatives UK made noises about growing the UK platform co-op movement, although it was unclear whether there’s much resource or energy for this. There’s still very little awareness of alternative models to traditional angel/VC backed businesses in the tech entrepreneurship community; even social enterprises, which have a purpose alongside making profit, are a fairly rare idea. It’s definitely important that we look to grow the movement beyond a small hardcore of tech co-op types. Even regular co-ops are largely invisible in the UK, with the exception of ‘the’ Co-op [supermarkets] and the unfortunate disaster of the Co-Operative Bank.

Whilst funds are scarce, perhaps we can try collaboration to reduce costs. Admittedly most of the VC investment in big platforms goes on marketing (and on subsidising the cost to the consumer, which in essence is marketing, as it secures more users), but some goes on tech development. How can we foster shared capabilities and reduce the capital needed for software? Many platforms (or at least, many platforms within a given category, such as worker platforms) will have similar technology needs. It would make sense to have open source, reusable components at the start of these ventures, reducing the risk capital up front, rather than waiting. How could we catalyse collaborative co-creation of such components before each new co-op develops its own custom stack? Might this be an opportunity for, well, someone? James Plunkett is discussing charity needs more broadly and suggests an arm’s length agency, an Office for Social Infrastructure, established in law but operationally independent from government, and tasked with licensing government-developed capabilities to charities, perhaps for free or subsidised, with guarantees over data privacy and independence. Maybe the same body could also pool resources to build new shared capabilities, in consultation with the non-profit sector.

David Bent’s write up of the event is great, and really hits the nail on the head with the question of user value. The ethical purchaser alone is not going to make the difference here — co-ops need to offer real value to the user beyond just being co-operative in structure.

The “slow tech” or “artisanal internet”, as WIRED puts it in a recent article, is nice, but it’s going to have to work very hard to compete with the big platforms. Nonetheless, we can’t just use our phones less and hope for the best (Rushkoff’s talk dismissed the Centre for Humane Technology on roughly these grounds, as truly humane technology would be nothing like the systems we have from Silicon Valley today).

In the face of unchecked power, sometimes you want to do more than resist. — WIRED

I think the reason for hope here is that we should not be trying to rebuild today’s technologies, but building wholly new things, using the alternative ownership and incentive models possible with co-ops and other equitable business structures. Tomorrow’s internet services might be very different from what we have today — more distributed, perhaps; holding data more locally; doing something well for one community rather than trying for global scale. They might build on today’s systems — perhaps improving the technologies already so old we sometimes forget they are there, like email.

We should also be able to architect our systems better, with the collective knowledge of several decades of internet stuff, designing in standards, interfaces, and governance at the right levels to achieve more beneficial outcomes for us all, individually and together.

About the Author

This article was written by Dr. Laura James. See more of her work.