Key Takeaways:

- The black box problem is a profound mystery surrounding AI.

- Not all machine learning systems are black boxes.

- With generative adversarial networks, researchers observe which parts of an image are altered to make a more convincing result, thereby also learning which parts of the input are most important to the system.

- GANs have become equally fascinating and unnerving in the realm of art, where machines are able to give the impression of a soul, bolts-and-binary masquerading as flesh-and-blood.

- Explanations of how an AI reaches its decisions must themselves be tested for accuracy.

- We already find it difficult to put trust in other humans, much less neural networks with which we are only just beginning to coexist.

The black box problem is a profound mystery surrounding AI. It says that we may understand the input, we may understand the output, but the AI’s decision-making process which bridges these two is a black box we can’t seem to peer inside. When given the task of helping diagnose patients, for example, why might an AI decide one patient carries the illness while another one doesn’t? Did the model diagnose the patient for legitimate reasons with which a doctor might agree? It’s easy to see why breaking into the black box is important in medical cases where lives are at stake. In other scenarios understanding the decision-making process can be important for legal reasons, for autonomous cars, or for helping programmers improve their machine learning models.

It’s not enough that a decision was made; we must understand how it happened. Now researchers are beginning to pry back the covering of the box, divesting it of its secrets. This is not unlike mapping the human mind.

Neural networks are, after all, loosely inspired by our brain. When your brain recognizes an object such as a horse saddle, a pattern of nerve cells will fire as a response to that stimulus. The visual of the horse itself will fire a different pattern of cells. So too do deep neural networks give certain responses depending on the input we feed them. But unlike the neurons in our heads, the neurons of a deep neural network are mathematical functions that receive input as numbers. Each neuron multiplies its inputs by adjustable values called weights, adds the results together, and passes the sum through an activation function. In the simplest case, the neuron sends a signal on to the next set of neurons only if that sum reaches a certain threshold, and so on in a cascading effect. Machines also rely on a training method called “backpropagation” that has no direct counterpart in biology. When the system’s output is incorrect, backpropagation sends the error backwards from the output layer through the hidden layers, adjusting the weights along the way so the network does better next time.
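To make the arithmetic concrete, here is a minimal sketch of a single artificial neuron in plain Python. It is an illustration under assumptions, not how any particular system is built: the sigmoid activation, the squared-error loss, the learning rate, and every name and number are chosen for the example. The update in `train_step` uses the same chain-rule idea that backpropagation applies layer by layer in a full network.

```python
import math

def sigmoid(z):
    """Smooth activation that squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train_step(inputs, target, weights, bias, lr=0.5):
    """One gradient-descent update for squared error on a single example."""
    out = neuron(inputs, weights, bias)
    # Chain rule: derivative of 0.5 * (out - target)^2 with respect to the weighted sum.
    delta = (out - target) * out * (1.0 - out)
    new_weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - lr * delta
    return new_weights, new_bias

# Illustrative starting values (assumptions, not taken from any real model).
weights, bias = [0.1, -0.2], 0.0
inputs, target = [1.0, 2.0], 1.0

before = neuron(inputs, weights, bias)
for _ in range(100):
    weights, bias = train_step(inputs, target, weights, bias)
after = neuron(inputs, weights, bias)

print(f"output before training: {before:.3f}, after: {after:.3f}")
```

Each pass nudges the weights in the direction that shrinks the error, so the output drifts toward the target. A deep network repeats this across many layers and millions of weights, which is where the opacity comes from.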

Not all machine learning systems are black boxes. Their level of complexity varies with their tasks and the layers of calculations they must apply to any given input. It’s the deep neural networks handling millions and billions of numbers that have become shrouded in a cloak of mystery.

To begin uncovering this mystery we must first analyze the input and understand how it’s affecting the output. Saliency maps are especially helpful. They highlight which parts of the input, such as regions of an image, the AI was focusing on most. In some cases the system will even color code the different parts of the image according to how much weight they held in making the final decision. AI usually takes into account not just an object itself but also the objects around it to help make identifications, much like using context clues to figure out the meaning of a word when you don’t know its definition.
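One simple way to picture a saliency map is to nudge each pixel slightly and measure how much the model’s score moves. The sketch below does that with finite differences on a 3x3 grid. `toy_score` is a hypothetical stand-in for a real classifier, and real tools typically compute these sensitivities with automatic differentiation rather than by nudging pixels one at a time.

```python
def toy_score(image):
    """Hypothetical stand-in for a classifier: responds mainly to the center pixel."""
    return 3.0 * image[1][1] + 0.5 * image[0][0] - 0.2 * image[2][2]

def saliency_map(score_fn, image, eps=1e-4):
    """Estimate |change in score / change in pixel| for every pixel."""
    base = score_fn(image)
    saliency = []
    for r, row in enumerate(image):
        out_row = []
        for c, _ in enumerate(row):
            nudged = [list(rw) for rw in image]  # copy so the original is untouched
            nudged[r][c] += eps
            out_row.append(abs(score_fn(nudged) - base) / eps)
        saliency.append(out_row)
    return saliency

# Illustrative pixel intensities between 0 and 1.
image = [[0.2, 0.4, 0.1],
         [0.5, 0.9, 0.3],
         [0.7, 0.6, 0.8]]

sal = saliency_map(toy_score, image)
for row in sal:
    print(" ".join(f"{v:.2f}" for v in row))
```

The largest value lands on the center pixel, which is exactly where the toy model was built to look. Rendering those values as colors gives the familiar heat-map overlay.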

Covering up parts of an image can cause the AI to change its results. This helps researchers understand why the system is making certain decisions and whether those decisions are being made for the right reasons. Early autonomous vehicle AI would sometimes make left turns based on the weather because, during training, the sky was always a particular color whenever the car turned left. The AI took this to mean that the sky’s romantic shade of lilac was an important component of making a left turn.
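The covering-up experiment can be sketched in a few lines. Here `sky_biased_score` is a hypothetical model deliberately built to lean on the top row of the image (the sky), echoing the left-turn anecdote. The image values and the row-by-row masking are assumptions made for illustration.

```python
def sky_biased_score(image):
    """Hypothetical 'turn left' score that wrongly relies on the top (sky) row."""
    sky = sum(image[0]) / len(image[0])
    road = sum(image[-1]) / len(image[-1])
    return 0.9 * sky + 0.1 * road

def occlusion_drops(score_fn, image, fill=0.0):
    """Blank out one row at a time and record how much the score falls."""
    base = score_fn(image)
    drops = []
    for r in range(len(image)):
        masked = [list(row) for row in image]
        masked[r] = [fill] * len(masked[r])
        drops.append(base - score_fn(masked))
    return drops

image = [[0.8, 0.8, 0.8],   # sky
         [0.3, 0.4, 0.3],   # horizon
         [0.5, 0.6, 0.5]]   # road

drops = occlusion_drops(sky_biased_score, image)
for name, drop in zip(["sky", "horizon", "road"], drops):
    print(f"masking {name}: score falls by {drop:.3f}")
```

Masking the sky causes by far the largest drop, which is the kind of signal that would tell a researcher the model is paying attention to the wrong thing.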

And what if to understand AI you enlisted the help of AI itself?

A generative adversarial network (GAN) consists of a pair of networks that each play a different role. One will generate data, often in the form of images of everyday objects, and the other will try to uncover whether or not the data is real. The second network is essentially trying to answer whether an image of a strawberry is a real photograph or was created by the first network. The first network is trained through this method to produce the most realistic images it can in order to consistently fool the second network. Researchers observe what parts of the image are altered to make a more convincing result, thereby also learning what parts of the input are most important to the system.
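The tug-of-war between the two networks is usually written as a pair of opposing loss functions. The sketch below computes them from hypothetical discriminator outputs (the probability that each image is real). It uses binary cross-entropy and the common non-saturating generator loss, which are assumptions for illustration; the article does not say which formulation any given system uses.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when real images score near 1 and fakes near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs, each the probability that an image is real.
d_real = [0.9, 0.8]
fooled = [0.7, 0.6]   # fakes the discriminator mostly believes
caught = [0.1, 0.2]   # fakes the discriminator mostly rejects

# The generator is better off when its fakes are believed...
print(generator_loss(fooled) < generator_loss(caught))
# ...and the discriminator is better off when it catches them.
print(discriminator_loss(d_real, caught) < discriminator_loss(d_real, fooled))
```

Training alternates between lowering one loss and then the other, so each network’s improvement makes the other’s job harder.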

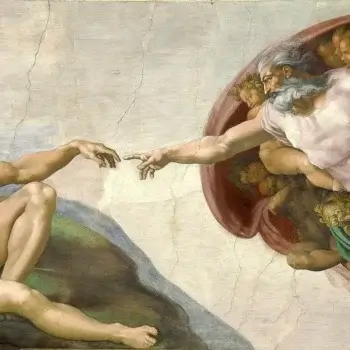

But the networks converse in secrecy — exactly how they work is an unspoken pact between the pair. How the first network is able to produce such convincing images is not yet well understood. GANs are able to create something that comes off as genuinely human: the picture of a face, or a poetic piece of writing. This has become equally fascinating and unnerving in the realm of art where machines are able to give the impression of a soul, bolts-and-binary masquerading as flesh-and-blood.

Researchers believe GANs are able to do this by specializing their neurons. Some neurons will focus on creating buildings, others plants, and others still features like windows and doors. For AI systems context clues are powerful tools. If a scene contains something like a window then it’s statistically more likely to be a room than a rocky cliff. Having these specialized neurons means that computer scientists can alter the system in ways that will make it more flexible and efficient. An AI that has only ever detected flowers because they’re inside a vase can be trained to treat a clay pot as a vase if a computer scientist understands which parts of the model to alter.
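One way to check whether a neuron is specialized is to switch it off and see what changes, often called ablation. The sketch below uses a tiny hand-built classifier rather than a GAN, purely to keep the example short. The weights, the “window” and “rock” labels, and the `room_score` function are all hypothetical.

```python
def relu(x):
    """Pass positive values through and clamp negatives to zero."""
    return x if x > 0.0 else 0.0

# Hypothetical weights: hidden unit 0 reacts to "window" features, unit 1 to "rock" features.
HIDDEN_WEIGHTS = [[1.0, 0.0], [0.0, 1.0]]
ROOM_WEIGHTS = [2.0, -1.0]  # the "room" score rises with unit 0 and falls with unit 1

def room_score(features, ablate=None):
    """Score for the 'room' class, optionally silencing one hidden unit."""
    hidden = [relu(sum(w * f for w, f in zip(ws, features))) for ws in HIDDEN_WEIGHTS]
    if ablate is not None:
        hidden[ablate] = 0.0
    return sum(w * h for w, h in zip(ROOM_WEIGHTS, hidden))

features = [0.8, 0.1]  # strong window evidence, weak rock evidence
base = room_score(features)
effects = [base - room_score(features, ablate=i) for i in range(len(HIDDEN_WEIGHTS))]

for i, effect in enumerate(effects):
    print(f"silencing unit {i} changes the room score by {-effect:+.2f}")
```

Silencing the “window” unit collapses the room score, while silencing the “rock” unit nudges it up slightly. Knowing which unit does what is what lets an engineer edit the model deliberately.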

These are the explanations we’ve settled on so far. But these explanations must be tested for accuracy. If we believe certain parts of an image play an important part in the AI’s final result then those sections of the image must be modified. The ensuing results should be drastically different. That is, not only must we evaluate the system itself to figure out what it’s doing, we must then evaluate our explanations to ensure that they are accurate. Even if explanations make sense to us that does not necessarily make them true. AI is like an alien that may have a human shape and human mannerisms but whose motives and reasoning don’t always follow human logic.
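Testing an explanation can be as simple as deleting the parts it calls important and comparing the effect with deleting an equally sized set of other parts. The sketch below is one such check under stated assumptions. `model_score` is a hypothetical model, and the comparison set is simply the first unclaimed pixels rather than a random sample.

```python
def model_score(pixels):
    """Hypothetical model: depends heavily on pixels 0 and 1, barely on the rest."""
    weights = [2.0, 1.5, 0.1, 0.05, 0.0, 0.0]
    return sum(w * p for w, p in zip(weights, pixels))

def drop_after_deleting(score_fn, pixels, indices):
    """Zero the chosen pixels and return how far the score falls."""
    modified = [0.0 if i in indices else p for i, p in enumerate(pixels)]
    return score_fn(pixels) - score_fn(modified)

def explanation_is_faithful(score_fn, pixels, claimed_important):
    """The claimed pixels should matter more than an equally sized set of others."""
    k = len(claimed_important)
    others = [i for i in range(len(pixels)) if i not in claimed_important][:k]
    claimed_drop = drop_after_deleting(score_fn, pixels, set(claimed_important))
    other_drop = drop_after_deleting(score_fn, pixels, set(others))
    return claimed_drop > other_drop

pixels = [1.0, 1.0, 1.0, 1.0, 1.0, 1.0]

# An explanation that matches what the model actually uses.
print(explanation_is_faithful(model_score, pixels, [0, 1]))
# An explanation that might sound plausible but points at pixels the model ignores.
print(explanation_is_faithful(model_score, pixels, [4, 5]))
```

The first explanation passes and the second fails, even though nothing about the second looks wrong until it is tested against the model’s behavior.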

It’s a labor-intensive process, understanding neural networks. Not all researchers think it’s a worthwhile endeavor. This is especially true with more complex networks that some find an insurmountable challenge. The thinking is that they are out of our hands and all that’s left to do is trust in their decision-making process.

There is also the challenge of integrating AI into fields as influential as law and medicine. Human beings are fickle, wary creatures. We already find it difficult to put trust in other humans, much less neural networks with which we are only just beginning to coexist. Whether or not a neural network has a good explanation might not make much of a difference in inspiring trust.

But humans and AI are becoming increasingly inseparable. Just as AI systems need us to learn and evolve, so too are we beginning to learn from them. Biologists might use a system to make predictions about which genes play which roles in the body. By understanding how the system makes its predictions scientists have begun to see the importance of gene sequences they might otherwise have deemed insignificant.

Whether or not the AI’s black box ever becomes a glass box, the truth is that we’re already on a path of reliance. Neural networks are becoming more capable and more complex, leading many researchers to believe we’ll never have a thorough understanding of just how neural networks learn and create. Yet with or without an explanation the worlds of the human and the machine are deeply intertwined, each of us still a mystery to the other.