Explanations of machine learning are often either too complex or overly simplistic. I’ve had some luck explaining it to people in person with some simple analogies:

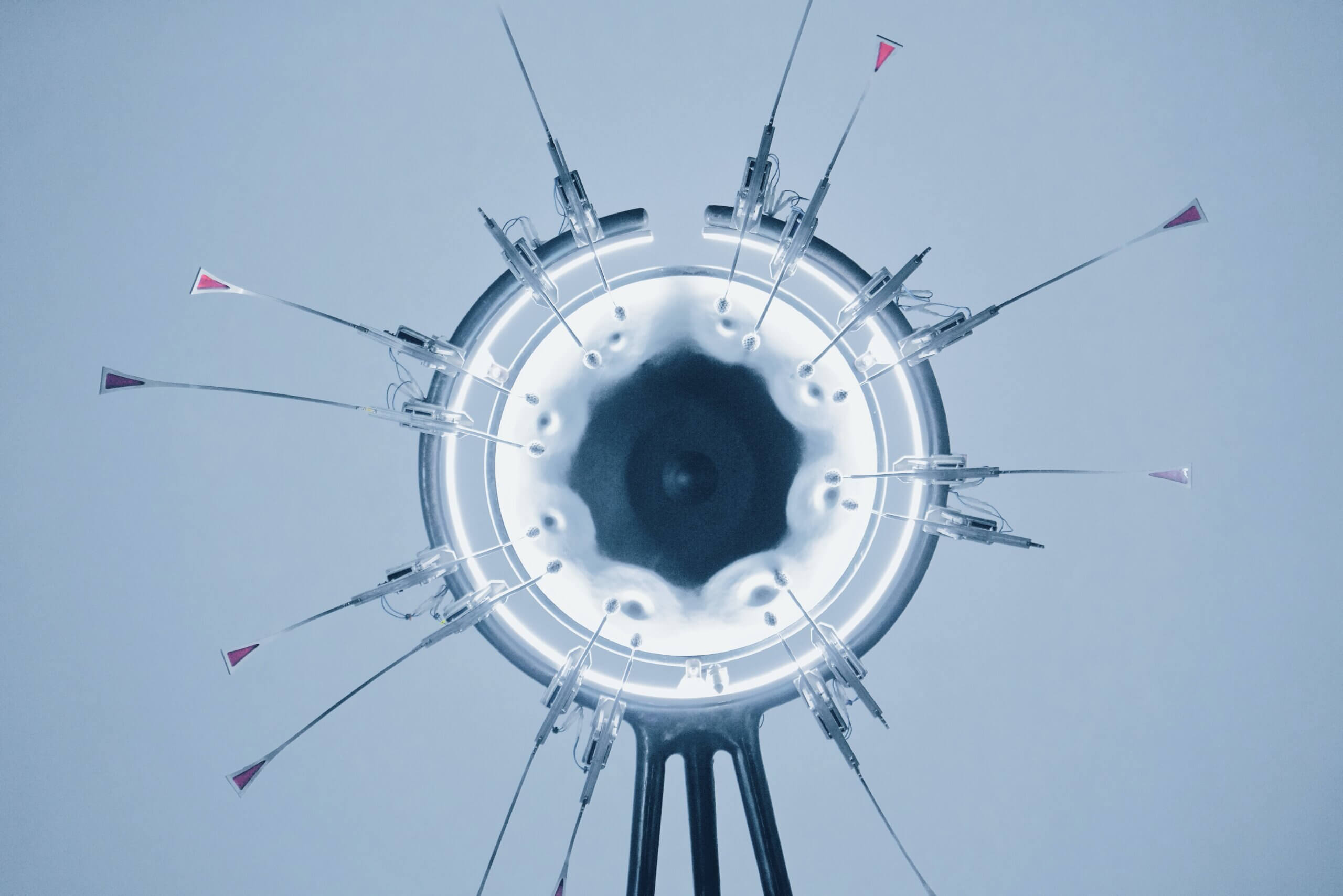

The Jet of Machine Learning

Scratch the surface, and you see that machine learning is basically a kind of ‘statistical thinking.’ We’ve long had tools for doing statistical analysis on data. Machine learning just automates that analysis so we can do it at much larger scale. The basic techniques have been around for decades, but machine learning didn’t really explode in popularity until just a few years ago with the advent of powerful new processors (Graphics Processing Units and later Tensor Processing Units) and large-scale data sets from Internet services like Google Search, Amazon and Facebook.

Andrew Ng makes the analogy that compute power is the jet engine and data is the jet fuel of machine learning. Rather than fly you to Chicago, this jet builds statistical models that draw on their underlying data to simulate reality, somewhat analogously to the way we simulate reality in our own brains. These algorithmic models extend our biological brains to help them do something they’re not really built for: thinking statistically.

Big Data and Models

Before this powerful new jet showed up, we were using machine learning to automate the building of statistical models. It saved a lot of time and energy compared with the labor-intensive statistical techniques that came before, and that opened up interesting new applications, such as analyzing inventory levels in a warehouse, estimating the threat of over-fishing from commercial boats, and predicting stock prices.

Applications like these are often described as “Big Data,” or data analytics. In this work’s early phases, the models were typically static, a kind of snapshot analysis of the underlying data. Despite this limitation, these techniques proved valuable for analyzing large datasets. That made them very popular in large corporations and resulted in a thriving ecosystem of data analytics companies.
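To make the idea of a static, snapshot-style model a little more concrete, here is a minimal sketch in Python. It fits a straight line to a handful of warehouse inventory readings using ordinary least squares, one of the simplest statistical techniques. The data values and the names `snapshot`, `fit_line`, and `predict` are invented for illustration and do not come from any real system.

```python
# Hypothetical snapshot of warehouse data: (week number, units in stock).
# These numbers are made up purely for illustration.
snapshot = [(1, 520), (2, 498), (3, 471), (4, 455), (5, 430)]


def fit_line(points):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# The model is "built" once from the snapshot and never updated afterwards.
slope, intercept = fit_line(snapshot)


def predict(week):
    """Estimate stock level for a given week using the fitted line."""
    return slope * week + intercept


print(f"Estimated stock in week 8: {predict(8):.0f} units")
```

Because the line is fitted once, the model reflects only the data it saw at that moment. If inventory patterns change later, the fit has to be rerun on a fresh snapshot, which is the limitation described above.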

Deepening the Automation

It’s worth calling out one of the specific tricks that we now use to automate building these statistical models. It’s called Deep Learning, and it has taken the machine learning world by storm. Deep Learning is so popular because it lets developers build models automatically by exposing neural networks to large datasets. These neural networks have multiple layers, much the way animal brains do. The lower layers focus on identifying the simplest and most concrete features of the input, handing off their results to subsequent layers, which work on progressively more complex and holistic interpretations of the data. The graphic below from Nvidia illustrates an example of layers in a deep neural network for identifying cars, starting with rudimentary lines, moving to wheel wells, doors, and other car parts, and finally on to full cars.